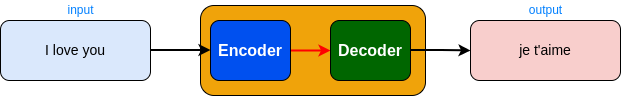

Let’s take a high-level look at the Transformer, treating it as a single black box in an English-to-French translation task, before getting into it in depth.

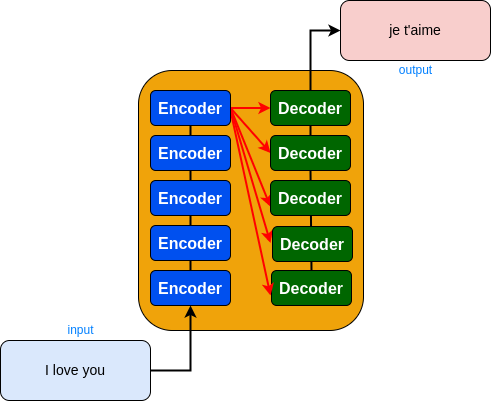

Opening up that box, we see an encoding component, a decoding component, and connections between them.

The encoding component is a stack of encoders built on top of each other, and the decoding component is a stack of the same number of decoders!

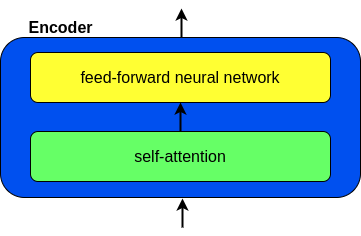
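To make the stacking concrete, here is a minimal Python sketch of how data flows through the two stacks. It assumes six layers per stack, as in the original Transformer, and uses hypothetical stand-in layers (`make_encoder`, `make_decoder`) that only record when they run; a real encoder or decoder would transform the word vectors. The point is the wiring: each encoder feeds the next, and the output of the top encoder is handed to every decoder.

```python
N = 6  # the original Transformer stacks six encoders and six decoders


def make_encoder(i, trace):
    """Hypothetical stand-in encoder that only logs that it ran."""
    def encoder(x):
        trace.append(("encoder", i))
        return x  # a real encoder would return new vectors for each position
    return encoder


def make_decoder(i, trace):
    """Hypothetical stand-in decoder that logs how many source positions it sees."""
    def decoder(y, memory):
        trace.append(("decoder", i, len(memory)))
        return y  # a real decoder would attend to `memory` here
    return decoder


def transformer_forward(src, tgt, encoders, decoders):
    x = src
    for encoder in encoders:
        x = encoder(x)  # each encoder's output is the next encoder's input
    memory = x  # output of the top encoder
    y = tgt
    for decoder in decoders:
        y = decoder(y, memory)  # every decoder receives the same encoder output
    return y


trace = []
encoders = [make_encoder(i, trace) for i in range(N)]
decoders = [make_decoder(i, trace) for i in range(N)]
transformer_forward(["I", "am", "a", "student"], ["je", "suis"], encoders, decoders)
print(trace)
```

All six encoders run first, in order; only then do the decoders run, each one seeing all four source positions.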

Although the encoders are structurally identical, they don’t share weights. Each encoder has two sub-layers: self-attention and a feed-forward neural network. While the encoder is encoding a given word in a sequence, the self-attention layer lets it take the other words (the context) into account. (That is, each word is encoded in light of the words around it.) The output of the self-attention layer is then fed to the feed-forward neural network. Note that the exact same feed-forward network is applied independently to each position. Don’t worry if you don’t get it yet!! In the parts that follow, we’ll go through every single detail. 💆‍♀️

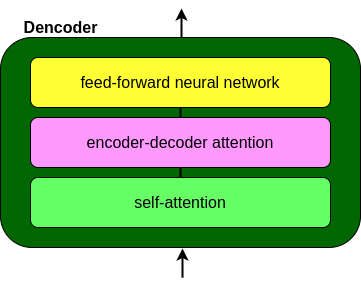
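Here is a minimal, pure-Python sketch of one encoder layer. It assumes a single attention head and toy sizes (`d_model=4`, `d_ff=8`), and it omits the residual connections, layer normalization and bias terms a full implementation would include. The names (`EncoderLayer`, `self_attention`, `rand_matrix`) are hypothetical, chosen for illustration. It shows the two ideas from the encoder description: self-attention mixes every position with its context, and one feed-forward network (the same weights) is then applied to each position separately. Building two layers from the same random generator also shows that identical structure does not mean shared weights.

```python
import math
import random


def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over all positions of x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, list(zip(*k)))  # q @ k^T: how much each word attends to each other word
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class EncoderLayer:
    def __init__(self, d_model, d_ff, rng):
        # Each layer draws its own parameters: same structure, separate weights.
        self.w_q = rand_matrix(d_model, d_model, rng)
        self.w_k = rand_matrix(d_model, d_model, rng)
        self.w_v = rand_matrix(d_model, d_model, rng)
        self.w_1 = rand_matrix(d_model, d_ff, rng)
        self.w_2 = rand_matrix(d_ff, d_model, rng)

    def feed_forward(self, vec):
        """Position-wise FFN: ReLU(vec @ W1) @ W2 (biases omitted)."""
        hidden = [max(0.0, h) for h in matmul([vec], self.w_1)[0]]
        return matmul([hidden], self.w_2)[0]

    def __call__(self, x):
        z = self_attention(x, self.w_q, self.w_k, self.w_v)  # each row now mixes in context
        return [self.feed_forward(row) for row in z]  # same FFN for every position


rng = random.Random(0)
encoders = [EncoderLayer(d_model=4, d_ff=8, rng=rng) for _ in range(2)]
inputs = rand_matrix(3, 4, rng)  # 3 toy word vectors of size d_model
h = inputs
for enc in encoders:
    h = enc(h)  # each encoder's output is the next encoder's input
print(h)
```

Because the feed-forward step runs row by row with the same `w_1` and `w_2`, feeding three identical word vectors into a layer produces three identical output rows.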

The decoder has both of those layers too, but between them sits an additional attention layer (encoder-decoder attention) that helps the decoder focus on the relevant parts of the input sentence.
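A matching sketch of one decoder layer, under the same assumptions (single head, toy sizes, no residual connections, layer normalization or biases; all names hypothetical). The middle step is the extra encoder-decoder attention layer: its queries come from the decoder, while its keys and values come from the encoder output, here a random stand-in called `memory`. The sketch also applies the causal mask used in the original Transformer's decoder self-attention, so each target position can only attend to itself and earlier positions.

```python
import math
import random


def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v, causal=False):
    """Single-head scaled dot-product attention; returns (output, weights)."""
    d_k = len(k[0])
    scores = matmul(q, list(zip(*k)))  # q @ k^T
    weights = []
    for i, row in enumerate(scores):
        scaled = [s / math.sqrt(d_k) for s in row]
        if causal:  # position i may only look at positions 0..i
            scaled = [s if j <= i else float("-inf") for j, s in enumerate(scaled)]
        weights.append(softmax(scaled))
    return matmul(weights, v), weights


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class DecoderLayer:
    def __init__(self, d_model, d_ff, rng):
        # separate projections for self-attention (s*) and encoder-decoder attention (c*)
        self.w = {name: rand_matrix(d_model, d_model, rng)
                  for name in ("sq", "sk", "sv", "cq", "ck", "cv")}
        self.w_1 = rand_matrix(d_model, d_ff, rng)
        self.w_2 = rand_matrix(d_ff, d_model, rng)

    def feed_forward(self, vec):
        hidden = [max(0.0, h) for h in matmul([vec], self.w_1)[0]]
        return matmul([hidden], self.w_2)[0]

    def __call__(self, y, memory):
        w = self.w
        # 1) masked self-attention over the target words produced so far
        z, self.self_weights = attention(
            matmul(y, w["sq"]), matmul(y, w["sk"]), matmul(y, w["sv"]), causal=True)
        # 2) encoder-decoder attention: queries from the decoder,
        #    keys and values from the encoder output (`memory`)
        c, self.cross_weights = attention(
            matmul(z, w["cq"]), matmul(memory, w["ck"]), matmul(memory, w["cv"]))
        # 3) the same feed-forward network, applied to each position separately
        return [self.feed_forward(row) for row in c]


rng = random.Random(0)
memory = rand_matrix(3, 4, rng)  # stand-in encoder output: 3 source words, d_model=4
y = rand_matrix(2, 4, rng)       # 2 target words so far
layer = DecoderLayer(d_model=4, d_ff=8, rng=rng)
out = layer(y, memory)
print(layer.cross_weights)
```

Each row of `cross_weights` has one entry per source word and sums to 1, which is how the decoder "looks at" the input sentence while producing each target word.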

Now that you’ve seen the major components of the model, let’s see how we prepare words to feed into it. Obviously, we can’t feed them in as raw natural-language text, right? Ya ask why? 🧐 Because this is a mathematical model, and it only understands numeric inputs! So for our model to understand words, they gotta be turned into numbers, right? Ya ask how words can be converted to numbers? 🧐 Continue to section 1 of this blog to find out. 😉
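As a toy illustration of what "numeric version" means, the sketch below assigns every distinct word an integer id. This is an assumption for illustration only: `build_vocab` and `encode` are hypothetical helpers that split on whitespace, whereas real Transformer pipelines typically use a trained subword tokenizer and then map each id to a learned embedding vector.

```python
def build_vocab(sentences):
    """Assign each distinct lowercase word an integer id, in first-seen order."""
    vocab = {}
    for sentence in sentences:
        for word in sentence.lower().split():
            vocab.setdefault(word, len(vocab))  # new words get the next free id
    return vocab


def encode(sentence, vocab):
    """Turn a sentence into a list of ids (raises KeyError for unseen words)."""
    return [vocab[word] for word in sentence.lower().split()]


vocab = build_vocab(["I am a student", "je suis étudiant"])
print(encode("I am a student", vocab))    # [0, 1, 2, 3]
print(encode("je suis étudiant", vocab))  # [4, 5, 6]
```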